Page 6: The Digital Domain: Architecture

Unit 6, Lab 1, Page 6


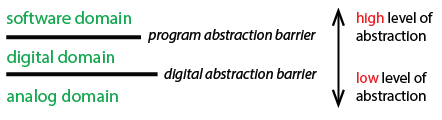

On this page, we shift from software to hardware, starting with the architecture, which is essentially the hardware as it looks to the software.

The software in a computer would be useless without the computer’s hardware: the actual circuitry inside the box. Just as there are layers of abstraction for software, hardware designers also think in layers of abstraction.

Everyone talks about computers representing all data using only two values, 0 and 1. But that’s not really how electronic circuits work. Computer designers can work as if circuits were either off (0) or on (1) because of the digital abstraction, the most important abstraction in hardware. Above that level of abstraction, there are four more detailed levels, called the digital domain. Below the digital abstraction, designers work in the analog domain, in which a wire in a circuit can have any voltage value, not just two values.
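One way to picture the digital abstraction is as a rule that turns a continuous voltage into a bit. The sketch below is only an illustration: the 3.3-volt supply and the one-third and two-thirds thresholds are assumed values, not taken from any real chip, and `to_bit` is a hypothetical helper name.

```python
# Illustrative only: the supply voltage and thresholds are assumed values.
SUPPLY_VOLTS = 3.3
LOW_MAX = SUPPLY_VOLTS / 3        # at or below this, treat the wire as 0
HIGH_MIN = 2 * SUPPLY_VOLTS / 3   # at or above this, treat the wire as 1


def to_bit(volts):
    """Map an analog voltage to a digital value, or None if it is in between."""
    if volts <= LOW_MAX:
        return 0
    if volts >= HIGH_MIN:
        return 1
    return None  # in-between voltage: neither a clean 0 nor a clean 1


readings = [0.0, 0.4, 1.6, 2.9, 3.3]
bits = [to_bit(v) for v in readings]
print(bits)
```

Designers working above the digital abstraction only ever see the 0s and 1s; keeping wires out of the in-between range is the job of the analog domain.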

On the next four pages, we’ll explore four levels of the digital domain.

The Stored Program Computer

As you’ll see in Lab 2, there have been machines to carry out computations for thousands of years. But the modern, programmable computer had its roots in the work of Charles Babbage in the early 1800s.

Babbage was mainly a mathematician, but he contributed to fields as varied as astronomy and economics. He lived from 1791 to 1871, about 150 years ago. At the time, electricity as a source of energy was unknown. The steam engine came into widespread use around the time he was born. The most precise machinery of his time was clockwork: gears.

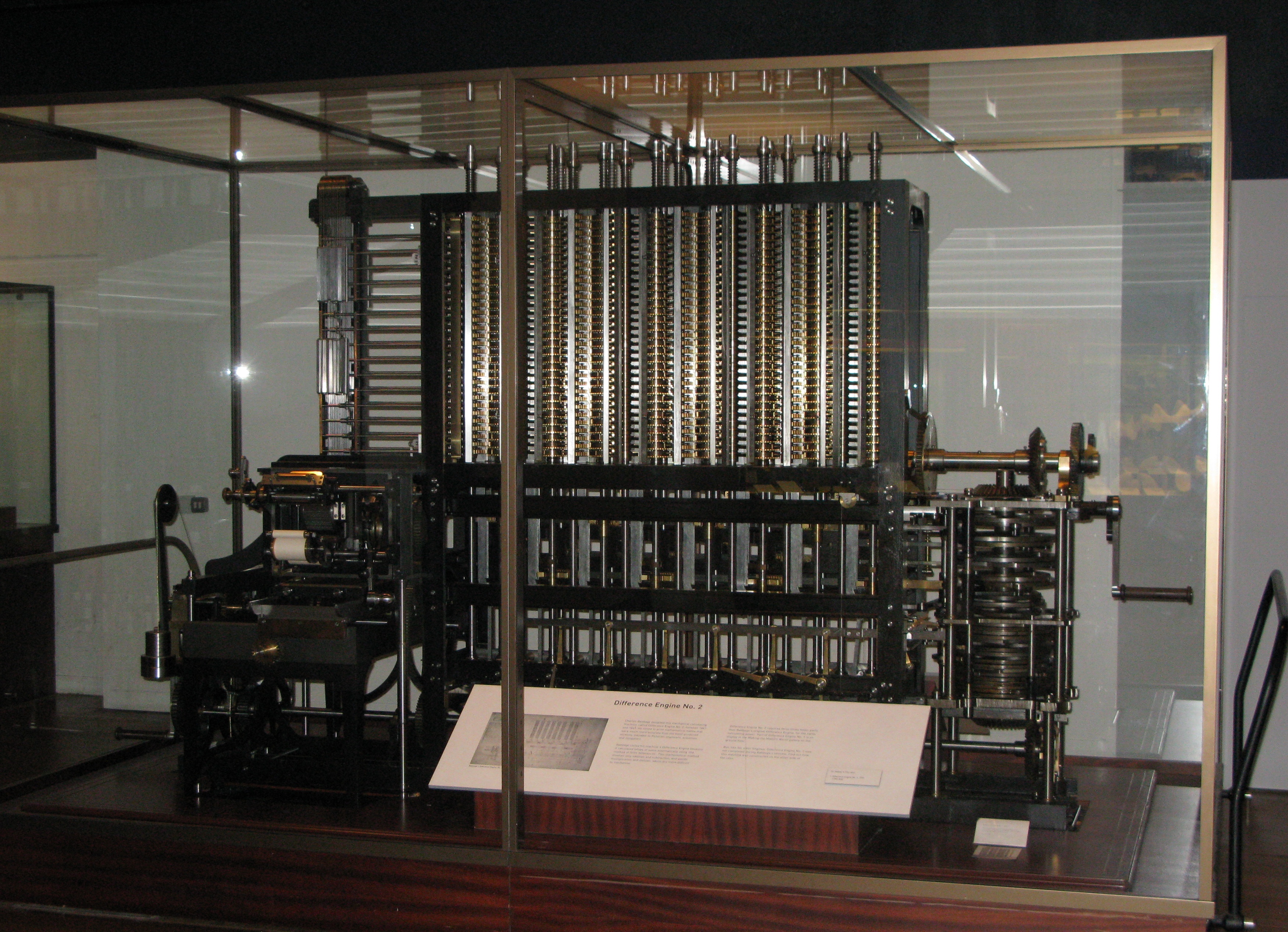

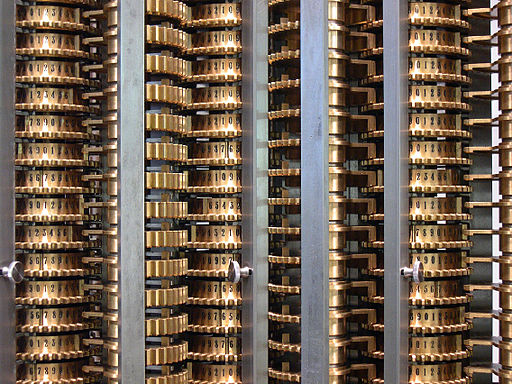

Difference Engine

Babbage’s first computer was the Difference Engine. He used gears to design a complex machine that would compute and print tables of numbers (like the tables of log or trig functions you may have in the back of a math textbook). But these gears needed to be so precise that each one had to be handmade. The project became so expensive that the government stopped funding it, and Babbage never finished a full-scale version.

| The Difference Engine at the London Science Museum | A closeup showing the gears more clearly |
| --- | --- |
| Image by Wikimedia user geni. Copyright 2008. License: GFDL, CC BY-SA. | Image by Carsten Ullrich. Copyright 2005. License: CC-BY-SA-2.5. |

Learn more about the history of the Difference Engine.

In Babbage’s time, such numerical tables were computed by hand by human mathematicians, and they were typeset by hand for printing. Both the computation and the copying into type were error-prone, and accurate tables were needed for purposes ranging from engineering to navigation.

Babbage built a first, small Difference Engine in 1822. This first effort proved that a Difference Engine was possible, but it didn’t have the precision (number of digits in each number) to be practical. In 1823, the British government funded Babbage to build a larger version. Unfortunately, metalsmiths in his day could not produce very precise gears in large quantities; each one had to be handmade. So he spent ten times his approved budget by the time the government canceled the project in 1842.

In 1991, the London Science Museum completed a Difference Engine following Babbage’s original design using gears made by modern processes but at the level of precision that was available to Babbage. This proved that, in principle, Babbage could have completed a working machine, given enough time and money.
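As background that this page does not spell out: the machine is named for the method of finite differences, which builds a table of polynomial values using nothing but repeated addition. The sketch below is a rough software analogy, not a model of the actual gearing; `difference_table` and the example polynomial are chosen here purely for illustration.

```python
def difference_table(initial_columns, count):
    """Tabulate values using only addition.

    initial_columns[0] is the first table value; the remaining entries are
    its first, second, ... differences (like the starting gear positions).
    """
    columns = list(initial_columns)
    values = []
    for _ in range(count):
        values.append(columns[0])
        # Add each column's right-hand neighbor into it, left to right,
        # so every addition uses the neighbor's old value.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return values


# f(x) = x*x + x + 41 at x = 0: value 41, first difference 2, second difference 2.
table = difference_table([41, 2, 2], 6)
print(table)
```

Changing the starting numbers changes which function gets tabulated, but the procedure itself never changes.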

The Analytical Engine

The Difference Engine could be used to compute many different functions by manually setting the starting position of various gears. But it had only one algorithm: the one built into the hardware design. In 1833, Babbage began working on the Analytical Engine, which was based on the general idea of the Difference Engine but could carry out instructions in a primitive programming language prepared on punched cards.

Punched cards used to program the Analytical Engine

Karoly Lorentey. Copyright 2004. License: CC-BY.

These days, we are surrounded by programmable computers, and having software seems obvious now. But it wasn’t obvious, and before Babbage, all algorithms were implemented directly in hardware.

So, 150 years ago, Babbage created plans for what is essentially a modern computer, although he didn’t have electronics available. His underlying idea for hardware was entirely mechanical, but it turned out not to be possible for him to build it with then-current technology. We didn’t get usable computers until there was an underlying technology small enough, inexpensive enough, and fast enough to support the software abstraction. You’ll learn about this technology, transistors, soon.

Learn more about the Analytical Engine.

The Analytical Engine, like modern computers, had an arithmetic processor (called the “mill”) and a separate memory (the “store”) that would hold 1,000 numbers, each with up to 40 digits. The mill did arithmetic in decimal (with digits 0-9 equally spaced around each gear); using just “ones and zeros” in computing came later.

The programming language used in the Analytical Engine included conditionals and looping, which is all you need to represent any algorithm. (It could loop because it could move forward or backward through the punched cards containing the program.)

Alas, Babbage could build only a small part of the Analytical Engine, which would have required even more metalworking than the Difference Engine. His notes about the design weren’t complete, and so nobody has ever built a working model, although there are simulations available on the Web (see the Take It Further problem below). Sadly, in the early days of electronic computers, Babbage’s work was not widely known, and people ended up reinventing many of his ideas.

Learn about Ada, Countess Lovelace’s invention of symbolic computing.

Although his design was very versatile, Babbage was mainly interested in printing tables of numbers. It was his collaborator Augusta Ada King-Noel, Countess of Lovelace, who first recognized that the numbers in Babbage’s computer could be used not only as quantities but also as representing musical notes, text characters, and so on.

Image by Alfred Edward Chalon, Science & Society Picture Library, Public Domain, via Wikimedia.

Much of what we know today about Babbage’s design comes from Ada Lovelace’s extensive notes on his design. Her notes included the first published program for the Analytical Engine, and so she is widely considered “the first programmer,” although it’s almost certain that Babbage himself wrote several example programs while designing the machine.

Whether or not she was truly the first programmer, historians agree that she did something more important: she invented the idea of symbolic computation (including text, pictures, music, etc.) as opposed to numeric computation. This insight paved the way for all the ways that computers are used today, from movies on demand to voice-interactive programs such as Siri and Alexa.

The abstraction of software (a program stored in the computer’s memory) is what makes a computer usable for more than one purpose.

What’s an Architecture?

The Analytical Engine (described above) was the first programmable computer architecture. The processor in the computer you are using today understands only one language, its own machine language—not Java, not C, not Snap!, not Python, nor anything else. Programs written in those other languages must first be translated into machine language.

The most important part of the architecture is the machine language, the set of ultra-low-level instructions that the hardware understands. This language is like a contract between the hardware and the software: The hardware promises to understand a set of instructions, and the software compiles programs from human-friendly language into those instructions.

Machine language is the lowest-level programming language; it is directly understood by the computer hardware.

Architecture is an abstraction, a specification of the machine language. It also tells how the processor connects to the memory. It doesn’t specify the circuitry; the same architecture can be built as circuitry in many different ways.

One important part of an architecture is the number of wires that connect the processor and memory. This is called the width of the architecture, measured in bits (number of wires). A wider computer can process more data in one instruction.
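To see why width matters, here is a rough sketch of adding two numbers on machines of different widths. The `add_with_width` helper is hypothetical; it models a processor that keeps only the low `width` bits of a result.

```python
def add_with_width(a, b, width):
    """Add two unsigned integers, keeping only the low `width` bits."""
    mask = (1 << width) - 1       # e.g. width 8 -> 0b11111111 (255)
    return (a + b) & mask


narrow = add_with_width(200, 100, 8)    # 300 does not fit in 8 bits
wide = add_with_width(200, 100, 16)     # 300 fits easily in 16 bits
print(narrow, wide)
```

The 8-bit result wraps around, so a narrow machine needs extra instructions to handle numbers that a wider machine adds in one step.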

What does machine language look like?

Consider the Snap! instruction that sets c to a + b. In a lower-level language such as C or Java, the same idea would be written as:

c = a+b;

That simple command might be translated into six machine language instructions (slightly simplified here):

    movq    _c, %rcx
    movq    _b, %rdx
    movq    _a, %rsi
    movl    (%rsi), %edi
    addl    (%rdx), %edi
    movl    %edi, (%rcx)

This notation, called assembly language, is a line-by-line equivalent to the actual numeric instruction codes but is slightly more readable.

The first three instructions load the addresses of the three variables into registers inside the processor. The names with percent signs, such as %rcx, refer to specific processor registers. Movq is the name of a machine language instruction. (It abbreviates “move quadword”; a quadword is a 64-bit value, which is the size of an address on this machine. Here each movq moves a constant value into a register. Note that a is a variable, but the address of a is a constant value; the variable doesn’t move around in the computer’s memory.)

The next instruction, movl (“move long”), says to move a word from one place to another. Putting a register name in parentheses, like (%rsi), means to use the memory location whose address is in the register. In this case, since the third movq put the address of a into register %rsi, the first movl says to move the variable a from memory into a processor register. Then the addl instruction says to add the variable b into that same register. Finally, the value in register %edi is moved into the memory location containing variable c.

You wouldn’t want to have to program in this language! And you don’t have to; modern architectures are designed for compilers, not for human machine language programmers.
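The six assembly instructions above can be traced step by step in a higher-level language. This is a simplified sketch, not a real emulator: the addresses and starting values are made up, and Python dictionaries stand in for the memory and the registers.

```python
# Memory maps an address to a value; the addresses are made up for this sketch.
ADDR_A, ADDR_B, ADDR_C = 1000, 1004, 1008
memory = {ADDR_A: 5, ADDR_B: 7, ADDR_C: 0}
reg = {}

# movq _c, %rcx / movq _b, %rdx / movq _a, %rsi: load the three addresses.
reg["rcx"] = ADDR_C
reg["rdx"] = ADDR_B
reg["rsi"] = ADDR_A

# movl (%rsi), %edi: copy the value of a from memory into a register.
reg["edi"] = memory[reg["rsi"]]

# addl (%rdx), %edi: add the value of b from memory into that register.
reg["edi"] += memory[reg["rdx"]]

# movl %edi, (%rcx): store the sum into the memory location of c.
memory[reg["rcx"]] = reg["edi"]

print(memory[ADDR_C])
```

Notice that the registers hold addresses in the first three steps and data values afterward; the parentheses in the assembly code mark the places where an address is followed into memory.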

::: endnote Learn about:

-

Most computer processors (the part that carries out instructions) in desktop or laptop computers use an architecture called “x86” that was designed at Intel, a chip manufacturer. The first processor using that architecture was called the 8086, released in 1978. (The reason for the name x86 is that the first few improved versions were called 80286, 80486, and so on.) The original 8086 was a 16-bit architecture; since then 32-bit (since 1985) and 64-bit (since 2003) versions have been developed. Even with all the refinements of the architecture, the new x86 processors are almost always backward compatible, meaning that today’s versions will still run programs that were written for the original 8086.

Why did the x86 architecture come to rule the world? The short answer is that IBM used it in their original PC, and all the later PC manufacturers followed their lead because they could run IBM-compatible software unmodified. But why did IBM choose the x86? There were arguably better competing architectures available, such as the Motorola 68000 and IBM’s own 801. The PC designers argued about which to use, but in the end, what made the difference was IBM’s long history of working with Intel.

The Apple Macintosh originally used the Motorola 68000 architecture, and in 1994 Apple designed its own PowerPC architecture in a joint project with IBM and Motorola, but in 2006 they, too, switched to the x86, because Intel kept releasing newer, faster versions of the x86 at a pace other companies couldn’t match.

-

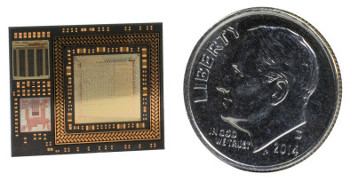

Everything about smartphone architecture is determined by the tiny size of the space inside the case. The height and width of the phone are constrained by the size of people’s front pockets. (Don’t keep your phone in your back pants pocket. That’s really bad both for the phone and for your back.) The front-to-back depth of a phone could be much bigger than it is, but for some reason phone manufacturers compete on the thinness of their phones, which gives designers even less room inside.

As a result, many components that would be separate from the processor chip in a computer are instead part of the same chip in a phone. These components may include some or all of a cellular modem, a WiFi modem, a graphics processor (another processor that specializes in parallel arithmetic on lists of numbers), memory, a GPS receiver to find your phone’s physical location, circuitry to manage the power depletion and recharging of the battery, and more. These days, the chip is likely to include two, four, or even eight copies of the actual CPU, to make multicore systems. This collection of components is called a system on a chip, or SoC.

Intel made an x86-based (that is, the same architecture used in PCs) low-power SoC called the Atom, which was used in a few Motorola phones and some others made by companies you’ve never heard of. It was made to support Android, Linux, and Windows phones.

But the vast majority of phones use the ARM architecture, which (unlike the x86) was designed from the beginning to be a low-power architecture. The acronym stands for Advanced RISC Machine. It’s available in 32-bit and 64-bit configurations.

“RISC” stands for Reduced Instruction Set Computer, as opposed to the CISC (Complex Instruction Set Computer) architectures, including the x86. The instruction set of an architecture is, as you’d guess from the name, the set of instructions that the processor understands. A RISC has fewer instructions than a CISC, but it’s simpler in other ways also. For example, a CISC typically has more addressing modes in its instructions. In the x86 architecture, the add instruction can add two processor registers, or a register and a value from the computer’s memory, or a constant value built into the instruction itself. A RISC architecture’s add instruction just knows how to add two registers (perhaps putting the result into a third register), and there are separate load and store instructions that copy values from memory to register or the other way around. Also, in a RISC architecture, all instructions are the same length (say, 32 bits) whereas in a CISC architecture, instruction lengths may vary. These differences matter because a RISC can be loading the next instruction before it’s finished with the previous instruction, and a RISC never has more than one memory data reference per instruction.

So why don’t they use a RISC architecture in PCs? At one time Apple used a RISC processor called the PowerPC in its Macintosh computers, but the vast majority of computers sold are PCs, not Macs, and as a result Intel spends vast sums of money on building faster and faster circuits implementing the x86 architecture. The moral is about the interaction between different levels of abstraction: A better architecture can be overcome by a better circuit design or better technology to cram components into an integrated circuit.
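The load/store style of a RISC described above can be sketched as a tiny interpreter. The three-instruction machine here is invented for illustration and is not the real ARM instruction set; `run_risc` and the register names are hypothetical.

```python
def run_risc(program, memory):
    """Run a tiny load/store program; only load and store touch memory."""
    regs = {}
    for op, *args in program:
        if op == "load":       # load reg, address
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "add":      # add dest, src1, src2 (registers only)
            dest, src1, src2 = args
            regs[dest] = regs[src1] + regs[src2]
        elif op == "store":    # store reg, address
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


memory = {"a": 5, "b": 7, "c": 0}   # names stand in for memory addresses
program = [
    ("load", "r1", "a"),
    ("load", "r2", "b"),
    ("add", "r3", "r1", "r2"),
    ("store", "r3", "c"),
]
run_risc(program, memory)
print(memory["c"])
```

Each instruction here makes at most one memory reference, which is the property that makes it easy to start fetching the next instruction early.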

The company that designed the ARM, called ARM Holdings, doesn’t actually build processors. They license either the architecture design or an actual circuit design to other companies that integrate ARM processors into SoCs. Major companies that build ARM-based processor chips include Apple, Broadcom, Qualcomm, and Samsung. Smartphone manufacturers buy chips from one of these companies.

-

embedded architecture and the “Internet of Things”

::: {#hint-architecture-iot .collapse} You can buy thermostats with computers in them, refrigerators with computers in them, fuzzy animal toys with computers in them—more and more things, as time goes on. Modern automobiles have several computers in them, largely for safety reasons; you wouldn’t want the brakes to fail because the DVD player has a problem. The goal, as described by researchers in computing, is “smart dust,” meaning that lots of computers could be floating around a building unnoticed. What good is an unnoticed computer? This is a classic dual use technology. The beneficial use everyone talks about is emergency response to disasters; it would be a great help to the fire department to know, from the outside, which rooms of a building have people in them. But another use for this technology would be spying.

NXP Freescale SCM-i.MX6D chip

For embedded computing, the main design criteria are small size and low power consumption. The chip in the picture above is based on the ARM architecture, like the chips in most cell phones. That’s actually a big embedded-systems chip; the Kinetis KL02 MCU (microcontroller unit) fits in a 2 millimeter square, less than 1/10 inch. That’s still too big to float in the air like dust, but imagine it in a sticky container and thrown onto the wall.

Someday, the spying will be even more effective (along with, we hope, treatment for diseases of the brain): ARM targets your brain with new implantable chips (Engadget, 5/17/2017).

Intel made a button-sized x86-compatible chip in 2015, but announced in 2017 that it would be discontinued, leaving only ARM and PowerPC-based processors competing in this market. :::

-

hobbyist computer architecture

In one sense, any architecture can be a hobbyist architecture. Even back in the days of million-dollar computers, there were software hobbyists who found ways to get into college computer labs, often by making themselves useful there. Today, there are much more powerful computers that are cheap enough that hobbyists are willing to take them apart. But there are a few computer architectures specifically intended for use by hobbyists.

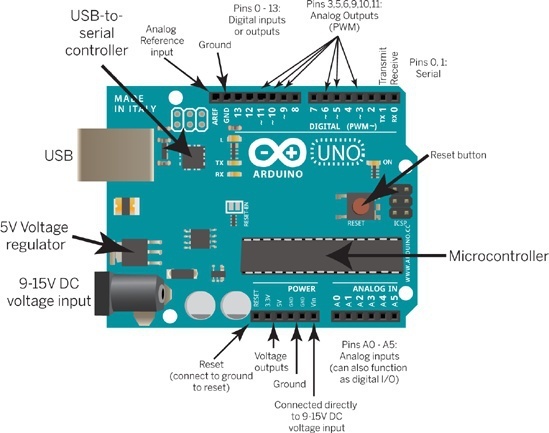

By far the most popular computer specifically for hobbyists is the Arduino. It’s a circuit board, not just a processor. Around the edges of the board are connectors. On the short edge on the left in the picture are the power input, which can connect to a power supply plugged into the wall or to a battery pack for a mobile device such as a robot, and a USB connector used mainly to download programs from a desktop or laptop computer. On the long edges are connectors for single wires connected to remote sensors (for light, heat, being near a wall, touching another object, etc.) or actuators (stepping motors, lights, buzzers, etc.).

One important aspect of the Arduino design is that it’s free (“free as in freedom”). Anyone can make and even sell copies of the Arduino. This is good because it keeps the price down (the basic Arduino Uno board costs $22) and encourages innovation, but it also means that there can be incompatible Arduino-like boards. (The name “Arduino” is a trademark that can be used only by license from Arduino AG.)

The processor in most Arduino models is an eight-bit RISC system with memory included in the chip, called the AVR, from a company called Atmel. It was designed by two (then) students in Norway, named Alf-Egil Bogen and Vegard Wollan. Although officially “AVR” doesn’t stand for anything, it is widely believed to come from “Alf and Vegard’s RISC.” There are various versions of the AVR processor, with different speeds, memory capacities, and of course prices; there are various Arduino models using the different processors.

Unlike most (“von Neumann architecture”) computers, the AVR (“Harvard architecture”) separates program memory from data memory. (It actually has three kinds of memory, one for the running program, one for short-term data, and one for long-term data.) Babbage’s Analytical Engine was also designed with a program memory separate from its data memory.

Why would you want more than one kind of memory?

There are actually two different design issues at work in this architecture. One is all the way down in the analog domain, having to do with the kind of physical circuitry used. There are many memory technologies, varying in cost, speed, and volatility: volatile memory loses the information stored in it when the device is powered off, while non-volatile memory retains the information. Here’s how memory is used in the AVR chips:

- EEPROM (512 Bytes–4kBytes) is non-volatile, and is used for very long term data, like a file on a computer’s disk, except that there is only a tiny amount available. Programs on the Arduino have to ask explicitly to use this memory, with an EEPROM library.

- The name stands for Electrically Erasable Programmable Read-Only Memory, which sounds like a contradiction in terms. In the early days of transistor-based computers, there were two kinds of memory, volatile (Random Access Memory, or RAM) and nonvolatile (Read-Only Memory, or ROM). The values stored in an early ROM had to be built in by the manufacturer of the memory chip, so it was expensive to have a new one made. Then came Programmable Read-Only Memory (PROM), which was read-only once installed in a computer, but could be programmed, once only, using a machine that was only somewhat expensive. Then came EPROM, Erasable PROM, which could be erased in its entirety by shining a bright ultraviolet light on it, and then reprogrammed like a PROM. Finally there was Electrically Erasable PROM, which could be erased while installed in a computer, so essentially equivalent to RAM, except that the erasing is much slower than rewriting a word of RAM, so you use it only for values that aren’t going to change often.

- SRAM (1k–4kBytes): This memory can lose its value when the machine is turned off; in other words, it’s volatile. It is used for temporary data, like the script variables in a Snap! script.

- The name stands for Static Random Access Memory. The “Random Access” part differentiates it from the magnetic tape storage used on very old computers, in which it took a long time to get from one end of the tape to another, so it was only practical to write or read data in sequence. Today all computer memory is random access, and the name “RAM” really means “writable,” as opposed to read-only. The “Static” part of the name means that, even though the memory requires power to retain its value, it doesn’t require periodic refreshing as regular (“Dynamic”) computer main memory does. (“Refreshing” means that every so often, the computer has to read the value of each word of memory and rewrite the same value, or else it fades away. This is a good example of computer circuitry whose job is to maintain the digital abstraction, in which a value is zero or one, and there’s no such thing as “fading” or “in-between values.”) Static RAM is faster but more expensive than dynamic RAM; that’s why DRAM is used for the very large (several gigabytes) memories of desktop or laptop computers.

- Flash memory (16k–256kBytes): This is the main memory used for programs and data. Flash memory is probably familiar to you because it’s used for the USB sticks that function as portable external file storage. It’s technically a kind of EEPROM, but with a different physical implementation that makes it much cheaper (so there can be more of it in the Arduino), but more complicated to use, requiring special control circuitry to maintain the digital abstraction.

- “More complicated” means, for example, that changing a bit value from 1 to 0 is easy, but changing it from 0 to 1 is a much slower process that involves erasing a large block of memory to all 1 bits and then rewriting the values of the bits you didn’t want to change.
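The flash behavior in the last item, where clearing a bit is easy but setting it again means erasing a whole block, can be modeled roughly as follows. `FlashBlock` is a hypothetical toy class, and the 8-bit block is far smaller than any real one.

```python
class FlashBlock:
    """Toy model of one flash block: bits can be cleared one at a time,
    but can only be set back to 1 by erasing the whole block."""

    def __init__(self, size):
        self.bits = [1] * size      # an erased block is all 1 bits
        self.erase_count = 0

    def erase(self):
        self.bits = [1] * len(self.bits)
        self.erase_count += 1

    def write_bit(self, index, value):
        if value == self.bits[index]:
            return                    # nothing to change
        if value == 0:
            self.bits[index] = 0      # 1 -> 0 is the easy direction
            return
        # 0 -> 1: remember the other bits, erase everything, then restore.
        saved = list(self.bits)
        saved[index] = 1
        self.erase()
        for i, bit in enumerate(saved):
            if bit == 0:
                self.bits[i] = 0


block = FlashBlock(8)
block.write_bit(2, 0)
block.write_bit(5, 0)
block.write_bit(2, 1)   # forces a full erase and rewrite
print(block.bits, block.erase_count)
```

The program using the memory just asks for a bit to change; the control circuitry hides the erase-and-rewrite step, which is how it maintains the digital abstraction.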

So, that’s why there are physically different kinds of memory in the AVR chips, but none of that completely explains the Harvard architecture, in which memory is divided into program and data, regardless of how long the data must survive. The main reason to have two different memory interface circuits is that it allows the processor to read a program instruction and a data value at the same time. This can in principle make the processor twice as fast, although that much speed gain isn’t found in practice.

To understand the benefit of simultaneous instruction and data reading, you have to understand that processors are often designed using an idea called pipelining. The standard metaphor is about doing your laundry, when you have more than one load. You wash the first load, while your dryer does nothing; then you wash the second load while drying the first load, and so on until the last load. Similarly, the processor in a computer includes circuitry to decode an instruction, and circuitry to do arithmetic. If the processor does one thing at a time, then at any moment either the instruction decoding circuitry or the arithmetic circuitry is doing nothing. But if you can read the next instruction at the same time as carrying out the previous one, all of the processor is kept busy.
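The laundry metaphor can be turned into a small calculation. This is a sketch under simple assumptions: there is one washer and one dryer, wet loads can wait in a basket, and the 30-minute times in the example are made up.

```python
def sequential_time(loads, wash_minutes, dry_minutes):
    """Finish each load completely before starting the next one."""
    return loads * (wash_minutes + dry_minutes)


def pipelined_time(loads, wash_minutes, dry_minutes):
    """Wash the next load while the previous one is drying."""
    if loads == 0:
        return 0
    slowest = max(wash_minutes, dry_minutes)
    # The first load takes the full time; each later load adds only the
    # time of the slower machine, because the two machines overlap.
    return wash_minutes + dry_minutes + (loads - 1) * slowest


one_at_a_time = sequential_time(4, 30, 30)
overlapped = pipelined_time(4, 30, 30)
print(one_at_a_time, overlapped)
```

With many loads and equally slow machines, the pipelined time approaches half of the sequential time, which is where the "twice as fast in principle" figure comes from.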

This was a long explanation, but it’s still vastly oversimplified. For one thing, it’s possible to use pipelining in a von Neumann architecture also. And for another, a pure Harvard architecture wouldn’t allow a computer to load programs for itself to execute. So various compromises are used in practice.

Atmel has since introduced a line of ARM-compatible 32-bit processors, and Arduino has boards using that processor but compatible with the layout of the connectors on the edges.

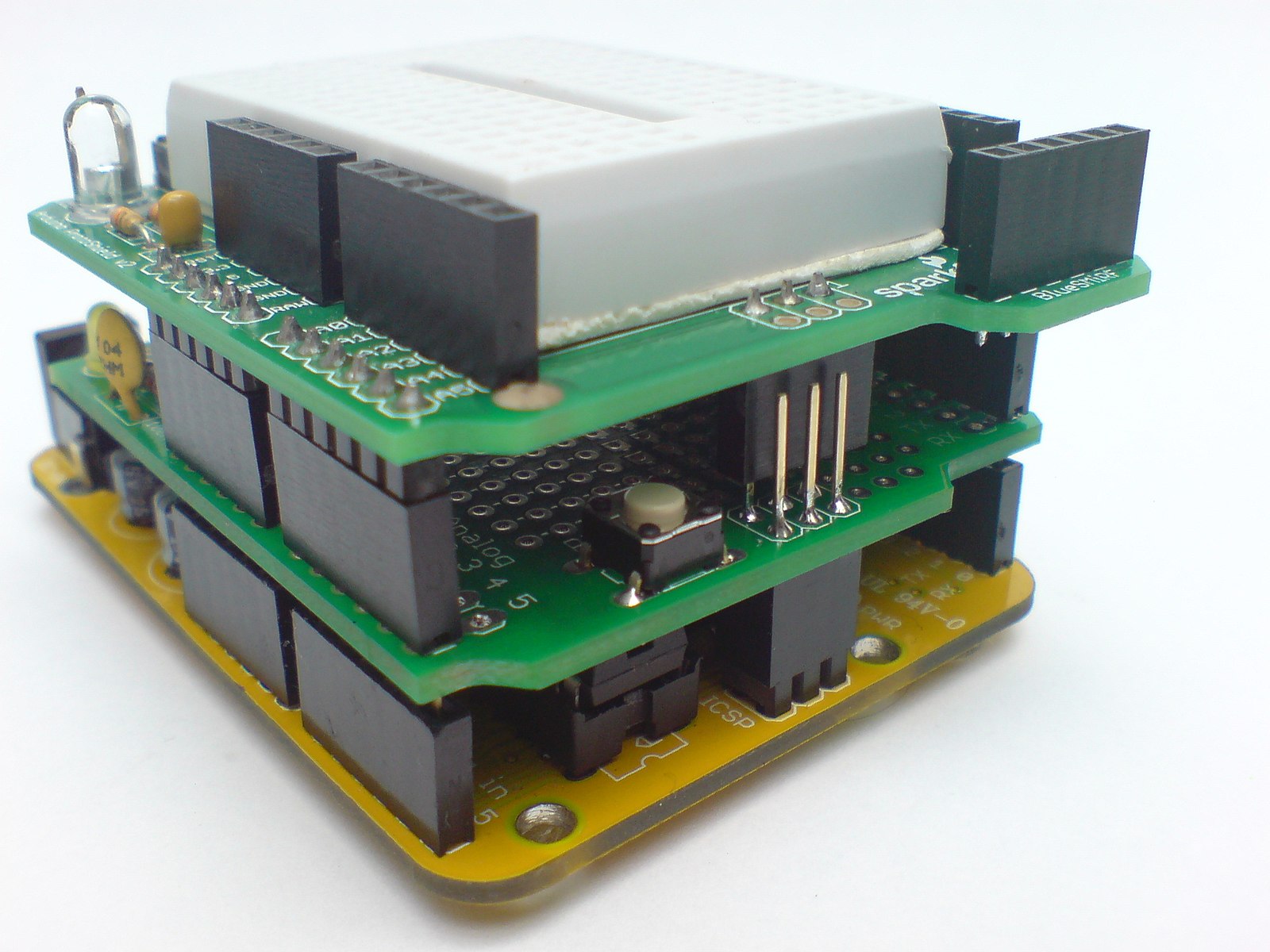

One thing that has contributed to the popularity of the Arduino with hobbyists is the availability of shields, which are auxiliary circuit boards that plug into the side edge connectors and have the same connectors on their top side. Shields add features to the system. Examples are motor control shields, Bluetooth shields for communicating with cell phones, RFID shields to read those product tags you find inside the packaging of many products, and so on. Both the Arduino company and others sell shields.

Stack of Arduino shields

Image by Wikimedia user Marlon J. Manrique, CC-BY-SA 2.0.

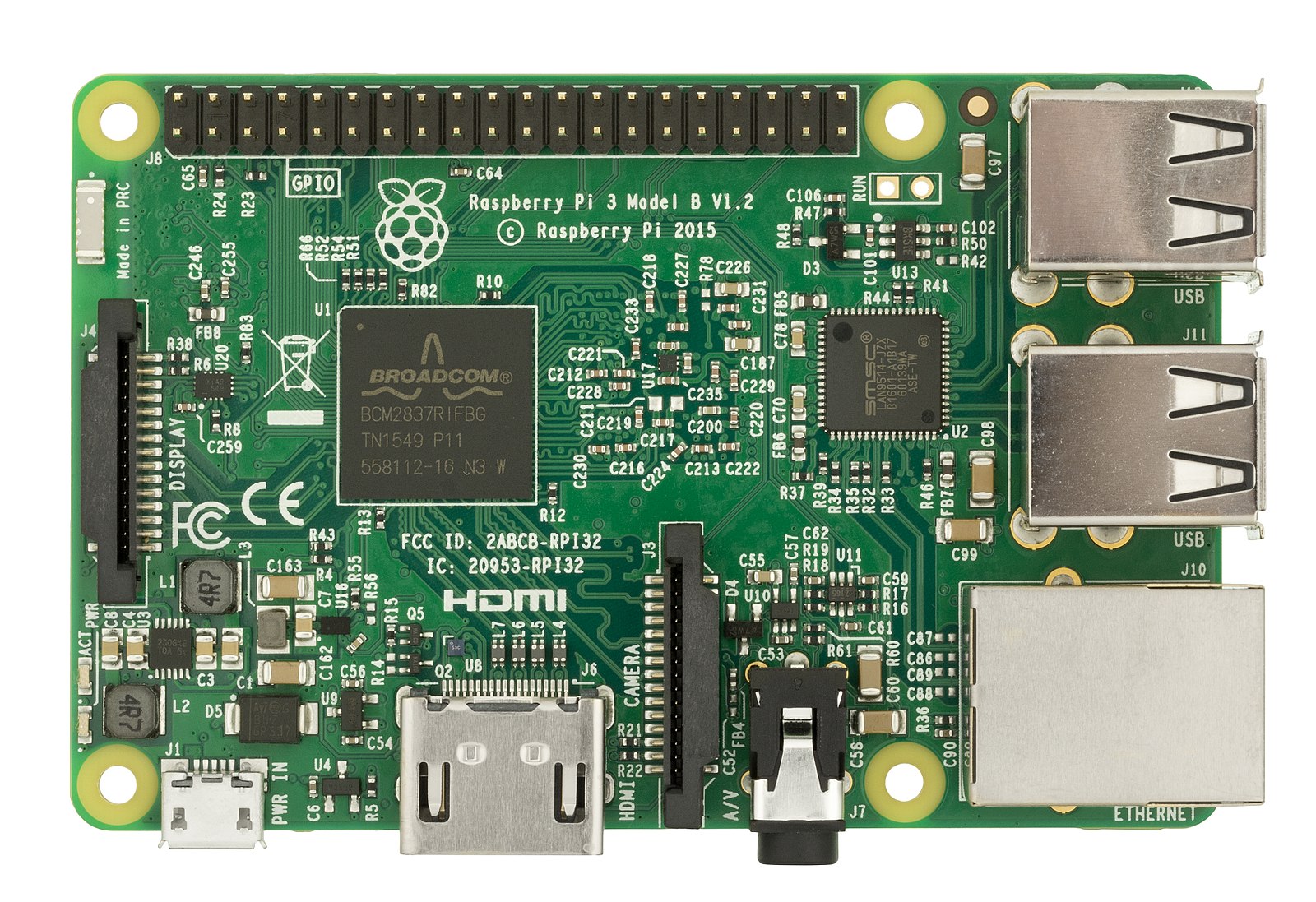

A completely different hobbyist architecture is the Raspberry Pi. It was designed to be used like a desktop or laptop computer, but with more access to its electronics. It uses an ARM-compatible processor, like most cell phones, but instead of running phone operating system software such as Android, it runs “real” computer operating systems. It ships with Linux, but people have run Windows on it.

The main thing that makes it exciting is that it’s inexpensive: different models range in price from $5 to $35. That price includes just the circuit board, as in the picture, without a keyboard, display, mouse, power adapter, or a case. The main expense in kit computers is the display, so the Pi is designed to plug into your TV. You can buy kits that include a minimal case, a keyboard, and other important add-ons for around $20. You can also buy fancy cases to make it look like any other computer, with a display, for hundreds of dollars.

Because the Pi is intended for educational use, it comes with software, some of which is free for anyone, but some of which generally costs money for non-Pi computers. One important example is Mathematica, which costs over $200 for students (their cheapest price), but is included free on the Pi.

Like the Arduino, the Pi supports add-on circuit boards with things like sensors and wireless communication modules.

Raspberry Pi board

Image by Evan Amos, via Wikimedia, public domain

-

:::

Learn more about computer architecture in general.


The memory hierarchy

For a given cost of circuit hardware, the bigger the memory, the slower it works. For this reason, computers don’t just have one big chunk of memory. There will be a small number of registers inside the processor itself, usually between 8 and 16 of them. The “size” (number of bits) of a data register is equal to the width of the architecture.

The computer’s main memory, these days, is measured in GB (gigabytes, or billions of bytes). A memory of that size can’t be fast enough to keep up with a modern processor. Luckily, computer programs generally have locality of reference, which means that if the program has just made use of a particular memory location, it’s probably going to use a nearby location next. So a complete program may be very big, but over the course of a second or so only a small part of it will be needed. Therefore, modern computers are designed with one or more cache memories—much smaller and therefore faster—between the processor and the main memory. The processor makes sure that the most recently used memory is copied into the cache.

One recent 64-bit x86 processor has a first level (L1) cache of 64KB (thousands of bytes) inside the processor chip, a larger but slower L2 cache of 256 KB, also inside the processor, and an L3 cache of up to 2 MB (megabytes, millions of bytes) outside the processor. Each level of cache has a copy of the most recently used parts of the next level outward: the L1 cache copies part of the L2 cache, which copies part of the L3 cache, which copies part of the main memory. Data in the L1 cache can be accessed by the processor about as fast as its internal registers, and each level outward is a little slower. Hardware in the processor handles all this complexity, so that programmers can write programs as if the processor were directly connected to the main memory.
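Locality of reference is easier to appreciate with a small simulation. The sketch below assumes a tiny cache of four lines of eight addresses each and a "discard the least recently used line" policy; real caches are far larger and their policies vary, and `hit_rate` is a hypothetical helper.

```python
from collections import OrderedDict


def hit_rate(addresses, cache_lines=4, line_size=8):
    """Fraction of accesses found in a small cache of recently used lines."""
    cache = OrderedDict()                 # line number -> True, oldest first
    hits = 0
    for addr in addresses:
        line = addr // line_size          # nearby addresses share a line
        if line in cache:
            hits += 1
            cache.move_to_end(line)       # mark as most recently used
        else:
            cache[line] = True
            if len(cache) > cache_lines:
                cache.popitem(last=False)  # evict the least recently used
    return hits / len(addresses)


nearby = list(range(64))                   # addresses 0, 1, 2, ... in order
scattered = [i * 64 for i in range(64)]    # every access far from the last
print(hit_rate(nearby), hit_rate(scattered))
```

A program that touches nearby addresses finds most of what it needs already in the cache, while one that jumps around gets no benefit from it.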

Second sourcing

Intel licenses other chip manufacturers to build processors that use the same architecture as Intel’s processors. Why do they do that? Wouldn’t they make more money if people had to buy from Intel? The reason is that computer manufacturers, such as Dell, Apple, and Lenovo, won’t build their systems around an architecture that is only available from one company. They’re not worried that Intel will go out of business; the worry is that there may be a larger-than-expected demand for a particular processor, and Intel may not be able to fill orders on time. But if that processor is also available from other companies such as AMD and Cyrix, then a delay at Intel won’t turn into a delay at Dell. Those other chip manufacturers don’t have to use the same circuitry as the Intel version, as long as their processors behave the same at the architecture level.

-

Learning enough about the Analytical Engine to be able to write even a simple program for it is quite a large undertaking. Don’t try it until after the AP exam, but if you are interested, there are extensive online resources available here:
