Page 1: A Brief History of Computers

Unit 6, Lab 2, Page 1

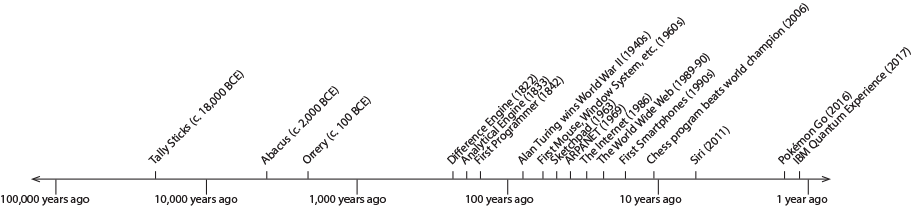

On this page, you will explore the long history of devices used for computation.

When was the first computer built? That depends on where you draw the line between computers and non-computers.

Question 1: Answer this on the Google Form

- Explore the timeline below, which shows selected events in the history of computing starting 20,000 years ago. Decide what you think makes sense to call the first real computer. Why?

-

Tally Sticks - First known computing aid.

Tally Sticks (c. 18,000 BCE)

20,000 years ago people cut patterns of notches into animal bones. Some experts believe that tally sticks were used to perform arithmetic computations.

-

Abacus - Ancient calculator, still in use.

The Abacus (c. 2,000 BCE)

The abacus is a computing device invented about 4,000 years ago. People who are well-trained to use it can perform calculations remarkably quickly, including square roots and cube roots on multi-digit numbers. In some countries, the abacus is still widely used today.

Image by Wikimedia user HB, Public Domain.

The computation algorithms are executed by the user, not the device.

-

Orrery - Mechanical device that computes planets’ orbits.

The Orrery (c. 100 BCE)

Since antiquity, many special-purpose computing devices have been invented. For example, this orrery displayed the positions of the planets in the Solar System. The first known device of this kind dates from about 2,100 years ago. People at that time generally believed that the Sun and the planets all revolved around the Earth, making the positions very complicated to work out, so the mechanism was much harder to design and build than it would be today.

No machine-readable image author provided. Marsyas assumed (based on copyright claims). GFDL, CC-BY-SA-3.0, or CC BY 2.5, via Wikimedia Commons.

Here is a modern example of an orrery.

Image by Flickr user Kaptain Kobold, licensed under the Creative Commons Attribution 2.0 license.

-

Difference Engine - Charles Babbage: Mechanical single-purpose computer.

The Difference Engine (c. 1822)

In 1822, Charles Babbage designed a device he called a “Difference Engine.” Made of precisely milled metal gears, it would compute and print tables of numbers, like log or trig functions. See more on the digital architecture page.
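The engine's name comes from the method of finite differences, which lets a table of polynomial values be extended using nothing but repeated addition, the one operation that columns of gears do well. The sketch below illustrates that idea in Python. It is not a model of Babbage's actual mechanism, and the function names and the sample polynomial are assumptions chosen only for illustration.

```python
def difference_table(values):
    """Return the first entry of each difference column for the seed values."""
    columns = []
    row = list(values)
    while row:
        columns.append(row[0])
        # Each new row holds the differences between neighbors in the old row.
        row = [b - a for a, b in zip(row, row[1:])]
    return columns


def tabulate(seed_values, count):
    """Produce `count` table entries using only additions."""
    registers = difference_table(seed_values)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Add each register's lower neighbor into it, working top to bottom.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


def poly(x):
    """Sample polynomial (an illustrative choice)."""
    return x * x + x + 41


# A polynomial of degree 2 needs 3 seed values to fix its differences.
seeds = [poly(x) for x in range(3)]
table = tabulate(seeds, 8)
```

After the seed values are set, every later entry of `table` comes from additions alone, which is loosely analogous to setting the starting position of the gears and then turning the crank.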

-

Analytical Engine - Babbage: First stored-program computer.

The Analytical Engine (c. 1833)

The Difference Engine could compute various functions by manually setting the starting position of its gears, but it had only one algorithm, built into the hardware design. In 1833, Babbage began a more ambitious project, the Analytical Engine. It was based on the general idea of the Difference Engine, but with a crucial difference: It could carry out instructions in a primitive programming language, prepared on punched cards. See more on the digital architecture page.

-

First Programmer - Ada Lovelace: First to understand the potential of programs for non-numeric data.

The First Programmer (c. 1842)

Babbage’s design was very versatile, but his interest was still mainly in printing tables of numbers. It was his collaborator Ada, Countess Lovelace, who first recognized that the numbers in Babbage’s computer could be used not only as quantities but also as representing musical notes, text characters, and so on. See more on the digital architecture page.

Image by Alfred Edward Chalon, Science & Society Picture Library, Public Domain, via Wikimedia.

-

Alan Turing wins World War II - Alan Turing leads a team that breaks the Enigma code using a very early electronic programmable computer.

Alan Turing Wins World War II (1940s)

Well, we’re exaggerating. Many people contributed to the Nazi defeat. But they weren’t all soldiers; some were mathematicians. Turing, who went on to develop the beginning ideas of computer science and to prove the Halting Theorem, which you saw in Unit 5, Lab 4: An Undecidable Problem, was the leader of a British team of mathematicians who made a breakthrough in decrypting messages encrypted with the German Enigma machine.

Cryptanalysis of the Enigma enabled western Allies in World War II to read substantial amounts of secret Morse-coded radio communications of the Axis powers. The western Supreme Allied Commander Dwight D. Eisenhower considered the military intelligence from this and other decrypted Axis radio and teleprinter transmissions “decisive” to the Allied victory…

Alan Turing, a Cambridge University mathematician and logician, provided much of the original thinking and designed the device. Engineer Harold “Doc” Keen turned Turing’s ideas into a working machine. (adapted from Cryptanalysis of the Enigma, Wikipedia CC-BY-SA)

-

First Mouse, Window System, etc. - Douglas Engelbart: An amazing number of firsts, including first hypertext, first collaborative editing, first video calling, much more.

“The mother of all demos” (1960s)

The first public demonstration in 1968 of a mouse, colleagues separated by miles working on the same screen, and many other technologies we now take for granted was so astonishing to the audience that it became known as “the mother of all demos.” (Just search that name on Wikipedia or YouTube.)

The inventor of this system, Douglas Engelbart, had a lifelong interest in using technology to augment human intelligence, in particular to support collaboration among people physically distant. He studied people doing intellectual work and noted that when they’re not using a computer they don’t sit rigidly in front of their desks; they wheel their chairs around the room as they grab books or just think. So he designed an office chair attachment of a lapboard containing a keyboard and a mouse: the first mouse. He also invented a way for people to collaborate on the same page at the same time, seeing each other’s mouse cursors. Documents created in the system had hyperlinks to other documents, long before the Web was invented and used this idea. There were lots of smaller firsts, too, such as a picture-in-picture display of the other person’s face camera, long before Skype. People remember Engelbart mostly because of the mouse, but he pioneered many features of the modern graphical user interface (GUI).

-

Sketchpad - Ivan Sutherland: First object-oriented programming system, early interactive display graphics.

Sketchpad (1963)

Ivan Sutherland’s 1963 Ph.D. thesis project, a program to help in drawing blueprints from points, line segments, and arcs of circles, pioneered both object-oriented programming and the ability to draw on a screen (using a light pen; the mouse hadn’t been invented yet). It was one of the first programs with an interactive graphical user interface, so people who weren’t computer programmers could use it easily.

Search for “Sketchpad” on YouTube to see a demonstration of this software.

-

ARPANET - The first version of what became the Internet.

ARPANET (1969)

The Advanced Research Projects Agency (ARPA) of the US Defense Department was and is still the main funder of computer science research in the United States. In the late 1960s through the early 1980s they supported the development of a network connecting mainly universities with ARPA-funded projects, along with a few military bases. The initial network in 1969 consisted of four computers, three in California and one in Utah. At its peak, around 1981, there were about 200 computers on the net. With such a small number of computers, each of them knew the name and “host number” of all the others. Special gateway computers called IMPs (Interface Message Processors) were used to connect the host computers to the network, like a router today.

Only organizations with ARPA research grants could be on the net. This meant almost all the network sites were universities, along with a small number of technology companies doing work for ARPA. Everyone knew everyone, and so the network was built around trust. People were encouraged to use other sites’ resources; there was a yearly published directory of all the network computers, including, for most of them, information on how to log in as a guest user. It was a much friendlier spirit than today’s Internet, with millions of computers and millions of users who don’t know or trust each other. But the friendly spirit was possible only because access to the ARPANET was strongly restricted.

The architects of the ARPANET knew that a system requiring every computer to know the address of every other computer wasn’t going to work for a network accessible to everyone. Their plan was to build a network of networks—the Internet. The TCP/IP protocol stack was designed and tested on the ARPANET.

-

The Internet - First network of networks, based on TCP/IP.

Internet (1986)

Gradually the ARPANET was divided into pieces. The first big pieces were MILNET for military bases and NSFnet, established in 1986 by the National Science Foundation, for research sites. This split was the beginning of the Internet. Smaller regional networks were created. Communication companies such as AT&T created commercial networks that anyone could join. Network access spread worldwide through satellite radio and through undersea cables. In 1995 the NSFnet was abolished, and everyone connected to the net through commercial Internet Service Providers.

-

The World Wide Web - Tim Berners-Lee: First widely available hypertext (clickable links) system.

World Wide Web (1989-90)

In the early days of the net, the main application-level protocols were Telnet, which allowed a user to log in remotely to another computer, and FTP (File Transfer Protocol), for copying files from one computer to another. FTP is great if you already know what you’re looking for, and exactly where it is in the other computer’s file system.

Several people had the idea of a system that would allow users to embed links to files into a conversation, so you could say “I think this document might help you.” In 1945, Vannevar Bush described a hypothetical device with the ability to embed links in files. In 1963, Ted Nelson made up the word “hypertext” as the name for this feature, but the first actual implementation was Douglas Engelbart’s system NLS, developed starting in 1963 and demonstrated in 1968.

In 1989, physicist Tim Berners-Lee implemented a hypertext system and named it the World Wide Web. At first it was used only by physicists, to share data and ideas. But by then the Internet was running, so his timing was right, and the name was much more appealing than the technical-sounding “hypertext” (although, as you saw in Unit 4, Lab 1: What Is the Internet?.

Berners-Lee’s vision was of a Web in which everyone would be both a creator and a consumer of information. But the Web quickly became a largely one-way communication, with a few commercial websites getting most of the traffic. Today, most Web traffic goes to Google, Facebook, CNN, Amazon, and a few others. But some degree of democracy came back to the Web with the invention of the blog (short for “web log”) in which ordinary people can post their opinions and hope that other people will notice them.

-

First Smartphones - First portable devices combining cell phone with pocket computer.

Smartphones (1990s)

The first device that could be thought of as a smartphone was demonstrated in 1992 and available for sale in 1994. But through the 1990s, people who wanted portable digital devices could get a cell phone without apps, and a “personal digital assistant” (PDA) that did run apps but couldn’t make phone calls. Around 1999, a few companies developed devices with the phone and the PDA in one housing, sharing the screen but essentially two separate computers in one box. The Kyocera 6035, in the photo, was a telephone with the flap closed, but was a Palm Pilot PDA with the flap open.

Kyocera 6035 image by KeithTyler, Public Domain

It was in the late 2000s that the two functions of a smartphone were combined in a single processor, with the telephone dialer as just one application-level program.

-

Chess program beats world champion - Deep Fritz program beats Vladimir Kramnik.

Chess program beats world champion (2006)

Programmers have been trying to design and build chess programs since at least 1941 (Konrad Zuse). Over the following decade, several of the leading computer science researchers (including Alan Turing, in 1951) published ideas for chess algorithms, but the first actual running programs came in 1957. In 1978, a chess program won a game against a human chess master, David Levy, but Levy won the six-game match 4½–1½. (The half points mean that one game was a tie.)

There are only finitely many possible chess positions, so in principle a program could work through all possible games and produce a complete dictionary of the best possible move for each player from each position. But there are about 10⁴³ board positions, and in 1950, Claude Shannon estimated that there are about 10¹²⁰ possible games, far beyond the capacity of even the latest, fastest computers. Chess programs, just like human players, can only work out all possible outcomes of the next few moves, and must then make informed guesses about which possible outcome is the best.
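The idea of looking ahead only a few moves and then guessing can be sketched in a few lines of Python. To keep the example small, it does not play chess. It uses a hypothetical toy game in which two players take turns removing one, two, or three stones from a pile, and whoever takes the last stone wins. The search is the negamax form of minimax with a depth limit; the function names and the placeholder `guess` heuristic are assumptions made for illustration, not a description of how any real chess program works.

```python
def legal_moves(pile):
    """A player may take 1, 2, or 3 stones, but no more than remain."""
    return [take for take in (1, 2, 3) if take <= pile]


def guess(pile):
    """Stand-in for an informed guess when the search must stop early.

    Returns 0.0, meaning "unknown"; a real program would estimate here.
    """
    return 0.0


def search(pile, depth):
    """Score a position for the player about to move: +1 win, -1 loss."""
    if pile == 0:
        return -1.0  # the opponent just took the last stone and won
    if depth <= 0:
        return guess(pile)  # out of lookahead, so fall back on the guess
    # A move is as good for us as the resulting position is bad for the opponent.
    return max(-search(pile - take, depth - 1) for take in legal_moves(pile))


def best_move(pile, depth):
    """Pick the move whose resulting position scores worst for the opponent."""
    return max(legal_moves(pile), key=lambda take: -search(pile - take, depth - 1))
```

With enough depth, `best_move(5, 4)` takes one stone, leaving the opponent a pile of four from which every move loses. With a very shallow depth on a large pile, every move scores 0.0 and the program is effectively guessing, which is the situation a chess program faces at the edge of its lookahead.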

A turning point in computer chess came in 1981, when the program Cray Blitz was the first to win a tournament, beat a human chess master, and earn a chess master rating for itself. The following year, the program Belle became the second computer program with a chess master rating. In 1988 two chess programs, HiTech and Deep Thought, beat human chess grandmasters.

In 1997, Deep Blue, a special-purpose computer built by IBM just to play chess, with 30 processors plus 480 special-purpose ICs to evaluate positions, beat world chess champion Garry Kasparov in a six-game match, 3½–2½. Its special hardware allowed Deep Blue to evaluate 200 million board positions per second.
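To get a feel for why even this speed falls far short of brute force, here is a rough back-of-the-envelope calculation in Python. It assumes a hypothetical machine that visits each of the roughly 10⁴³ board positions exactly once at Deep Blue's rate of 200 million positions per second. The variable names are illustrative, and the result is only an order-of-magnitude estimate.

```python
POSITIONS = 10 ** 43                 # approximate number of chess board positions
RATE = 200_000_000                   # positions evaluated per second
SECONDS_PER_YEAR = 60 * 60 * 24 * 365

# Whole years needed to visit every position once at that rate.
years = POSITIONS // (RATE * SECONDS_PER_YEAR)
print(f"about {years:.1e} years")
```

The answer is more than 10²⁷ years, which is why chess programs search only a few moves ahead instead of the whole game.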

In 2006, world chess champion Vladimir Kramnik was defeated 4–2 in a six-game match by Deep Fritz, a chess program running on an ordinary computer. Although the score looks overwhelming, one of the games Deep Fritz won was almost a win for Kramnik, who failed to see a winning move for himself and instead set up the computer for a one-move checkmate. Without this blunder, the match would have been tied 3–3.

Chess programs continue to improve. In 2009, the program Pocket Fritz 4, running on a cell phone, won a tournament and reached grandmaster rating. The program, in contrast to Deep Blue, could evaluate only 20,000 positions per second, so this win shows an improvement in strategy, not just an improvement in brute force computer speed.

-

Siri - Apple’s personal assistant for the iPhone.

Siri (2011)

Siri is Apple’s personal assistant software. It was first released as a third-party app in the App Store in 2010; Apple then bought the company that made it, and included Siri as part of iOS in 2011.

Siri was not the first program to understand speech. Dragon Dictate, a speech-to-text program, was released in 1990. Research laboratory efforts started much earlier than that; in 1952, a program developed at Bell Labs was able to understand spoken digits. As time went on, the number of words understood by the programs increased. In 2002, Microsoft introduced a version of its Office programs (including Word) that would take spoken dictation.

In 2006, the National Security Agency (NSA) started using software to recognize keywords in the telephone calls it spies on.

The first cell phone app using speech recognition was Google’s Voice Search in 2008, but it just entered the words it heard in a search bar without trying to understand them. What was new in Siri wasn’t speech recognition, but its ability to understand the sentences spoken by its users as commands to do something: “Call Fred,” “Make an appointment with Sarah for 3pm tomorrow,” and so on.

Reviews of Siri’s performance in 2011 weren’t all good. It had trouble understanding Southern US or Scottish accents. It had a lot of trouble with grammatically ambiguous sentences. Its knowledge of local landmarks was spotty. Nevertheless, it prompted a new surge of buyers of Apple telephones.

More recently, Microsoft (Cortana), Amazon (Alexa), and Google (Assistant) have introduced competing speech-based personal assistant programs.

-

Pokémon Go - First widely used augmented reality game.

Pokémon Go (2016)

Augmented reality is a technique in which the user sees the real world, but with additional pictures or text superimposed on it. Although it had been used earlier, the first major public exposure to augmented reality was in the game Pokémon Go. Players walk around while looking at their phone screens, which show what the camera is seeing, but with the occasional addition of a cartoon character for the player to catch. Every player looking at the same place sees the same character, because the game uses the phone’s GPS to locate the player.

The programming of the game was impressive, but even more impressive was the effort the developers put into placing the cartoon characters at locations around the world that are accessible, open to the public, and not offensive. (Niantic, the company that developed the game, had to remove some locations from their list because of complaints, including cemeteries and Holocaust museums.)

The game was downloaded over 500 million times in 2016, and, unusually for a video game, was enthusiastically supported by many players’ parents, because the game gets players out of the house and exercising by walking around. Also, because players congregate at the locations of Pokémon, the game encouraged real-life friendships among players. On the other hand, there were safety concerns, partly because players would cross streets staring into their phones instead of watching for traffic, and partly because certain Pokémon were placed in front of fire stations, or in locations that encouraged players to cross railroad tracks.

-

IBM Quantum Experience - A 16-qubit quantum computer available for free use on the Internet.

IBM Quantum Experience (QX) (2017)

IBM first put a five-qubit (quantum bit) quantum computer on the Internet in 2016 (see Quantum Computing - IBM Q - US), but the following year they added a much more powerful 16-qubit computer. (The qubit is the equivalent for quantum computers of a bit in ordinary computers.) Anyone can use it, free of charge, although there is always a waiting list for appointments.

The usual oversimplified description of quantum computing is that a qubit (pronounced “Q bit”) is “both zero and one at the same time.” It’s closer to the truth to say that a qubit is either zero or one, but we don’t know which until it is examined at the end of a computation, at which point it becomes an ordinary bit with a fixed value. This means that a quantum computer with 16 qubits isn’t quite as powerful as 2¹⁶ separate computers trying every possible combination of bit values in parallel. We know that certain problems whose known algorithms on ordinary computers take exponential time can be solved in polynomial time by quantum computers, and we know that certain others can’t, but there is still a big middle ground of such problems for which we don’t know how fast quantum computers can be.

The IBM QX has been used for a wide variety of quantum computations, ranging from academic research to a multiplayer Quantum Battleship game.

Question 2: Answer this on the Google Form

-

Discussion:

- Does a device have to be programmable to be a computer?

- Does it have to operate by itself?

Here are two key ideas:

- Software, in the form of a program stored in the computer’s memory, is, itself, a kind of abstraction. It is what makes a computer usable for more than one purpose.

- We didn’t get usable computers until there was an underlying technology (the transistor) small enough, inexpensive enough, and fast enough to support the program abstraction.